Proxmox Clustering using 2 nodes and ZFS mirroring

Preamble

TLDR; I like running hypervisors, and I use Proxmox now.

I self-host a lot. My house has a NAS (Debian + ZFS) and a VM hypervisor running a reasonable number of VMs: pfSense, a UniFi controller, Home Assistant, Immich, Jitsi and, of course, my Mastodon instance. All normal, healthy things to run yourself at home.

VMs have some real benefits: snapshots make it easy to dig yourself out of a hole, running a single hypervisor rather than a pile of SBCs generally makes sense, and with all your eggs in that one basket, you can make the basket reasonably robust with decent storage and backups.

I've run on commodity hardware for the last 20 years. Typically I buy a modern mid-range desktop board with an eye to power efficiency, and then I can run an awful lot on it without a huge power bill. For the last decade I've been running Skylake-era Intel chips (first an i5-6400, later an i5-7700K), eventually with 64GB of RAM.

Others choose to buy ex-corporate servers and feel that redundant power supplies, ECC memory and remote management are worthwhile features. I think full-depth 1U servers are an obnoxious form factor for a home: the noise is unbearable and the power consumption is far higher than I can tolerate. Each to their own.

I used VMware ESXi for my home setup for over a decade, but VMware was bought by Broadcom, and in a whirlwind of changes the free ESXi offering was cancelled. That gave me enough of a push to move to Proxmox.

The last 18 months - Single Node Proxmox

TLDR; Single-node Proxmox is awesome, but patching and maintenance are hard. Clustering is the logical next step.

Proxmox offers some features which VMware either doesn't offer (local RAID via ZFS) or locks behind license keys (backup). Proxmox allowed me to build a platform which is more robust and more pleasant to use. A single SSD has turned into mirrored SSDs, backups happen weekly to the NAS, hardware compatibility is much better, and I don't need to buy Intel NICs just for driver support.

The problem with a single-node setup is downtime. The whole house runs through the one server. It has been very reliable, but if it does go wrong I can't fix it remotely. More routinely, upgrades like Proxmox 8 to Proxmox 9 are a little scary with limited rollback options, and in any case patching means taking down the internet for the whole house. The wife puts up with a lot, but...

The logical next step is Proxmox clustering, which is available at no cost (unlike VMware) and without a huge overhead. But meaningful clustering requires shared storage. Putting all the storage on the NAS would just turn one standalone server into two servers that both depend on a standalone NAS, and that NAS also needs patching, expanding and so forth. So first we need to work out how to do shared storage.

Ceph

TLDR; Ceph is recommended alongside Proxmox in any number of places, and it is an easy rabbit hole to go down. For small clusters, it's probably a bad fit.

Ceph is a distributed storage system. It can provide block devices, a filesystem or object storage. It's built into Proxmox as a shared storage option, and tends to get the headlines ("I built a 3-node cluster with 192TB of SSD and it's really fast. Sponsored by $SSD Manufacturer"). The Proxmox manual has a whole section on how to set up Ceph.

For my setup, it could have worked. I would have needed 3 nodes (30-40W idle each), all with a reasonable number of cores and enough RAM to run a complex distributed storage system, in a configuration where every node holds a copy of all the data.
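The sketch below puts the power argument into numbers. It converts idle draw into energy per year for a 3-node Ceph cluster and for the 2-node-plus-QDevice layout described later in this post. Two assumptions: 35 W per node is taken as the midpoint of the 30-40 W range above, and the QDevice counts as free because it runs on a NAS that is on anyway.

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(nodes: int, idle_watts: float) -> float:
    """Energy per year for `nodes` machines each idling at `idle_watts`."""
    return nodes * idle_watts * HOURS_PER_YEAR / 1000


# Assumed 35 W per node: the midpoint of the 30-40 W idle range quoted above.
ceph_cluster = annual_kwh(nodes=3, idle_watts=35)
# The QDevice adds nothing here because it runs on the always-on NAS.
two_node = annual_kwh(nodes=2, idle_watts=35)

print(f"3-node Ceph cluster: {ceph_cluster:.0f} kWh/year")
print(f"2 nodes + QDevice:   {two_node:.0f} kWh/year")
print(f"difference:          {ceph_cluster - two_node:.0f} kWh/year")
```

That comes to roughly 920 versus 613 kWh a year, so the third node costs about 307 kWh annually before any hardware cost. Multiply by your own tariff to get a price.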

Ceph also comes with strong recommendations for a dedicated 10GbE network. That's something I could do, but would rather not have to.

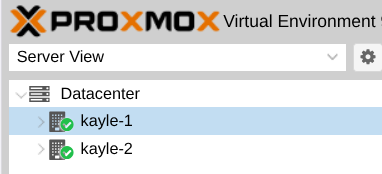

ZFS Replication

TLDR; ZFS replication allows easy point-to-point updates between a source and a target.

While Ceph has a whole section of the Proxmox manual, replication gets a few paragraphs. ZFS replication works like this:

- The primary node takes a ZFS snapshot

- (First time only) the whole disk image, from the start up to that snapshot, is sent to the replication target

- Every few minutes (the schedule is configurable), a new snapshot is taken

- The delta between the last replicated snapshot and the newest snapshot is sent to the target

- The oldest snapshot is deleted

- We repeat the process
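The steps above can be modelled in a few lines of Python. This is a toy and does not reflect how ZFS works internally. Real replication streams come from zfs send (a full send, or an incremental one with -i) and are applied with zfs receive. Here, a dataset is just a dict of block contents. The Dataset class and replicate function are hypothetical names for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Dataset:
    """Toy stand-in for a ZFS dataset: block number -> contents, plus snapshots."""
    blocks: dict[int, bytes] = field(default_factory=dict)
    snapshots: dict[str, dict[int, bytes]] = field(default_factory=dict)

    def snapshot(self, name: str) -> None:
        # Real ZFS snapshots are copy-on-write and nearly free; a dict copy models that.
        self.snapshots[name] = dict(self.blocks)


def delta(old: dict[int, bytes], new: dict[int, bytes]) -> dict[int, bytes | None]:
    """Blocks that differ between two snapshots; None marks a block that was freed."""
    changes: dict[int, bytes | None] = {b: d for b, d in new.items() if old.get(b) != d}
    changes.update({b: None for b in old if b not in new})
    return changes


def replicate(source: Dataset, target: Dataset, snap: str) -> int:
    """Run one replication cycle and return the number of blocks sent."""
    source.snapshot(snap)
    previous = [name for name in source.snapshots if name != snap]
    if previous:
        # Incremental: only the difference from the last common snapshot.
        changes = delta(source.snapshots[previous[-1]], source.snapshots[snap])
    else:
        # First run: everything up to the snapshot is sent.
        changes = dict(source.snapshots[snap])
    for block, data in changes.items():
        if data is None:
            target.blocks.pop(block, None)
        else:
            target.blocks[block] = data
    target.snapshot(snap)
    # Keep only the newest snapshot, which is now common to both sides.
    for name in previous:
        del source.snapshots[name]
        target.snapshots.pop(name, None)
    return len(changes)


src, dst = Dataset(), Dataset()
src.blocks = {i: b"base" for i in range(1000)}
print(replicate(src, dst, "rep1"))  # full send of all 1000 blocks
src.blocks[7] = b"log line"         # the guest keeps writing...
src.blocks[1000] = b"new file"
print(replicate(src, dst, "rep2"))  # incremental send of just 2 blocks
assert dst.blocks == src.blocks and list(dst.snapshots) == ["rep2"]
```

The model makes the same point as the list. After the first full send, each run costs only the blocks changed since the last common snapshot, however large the disk is.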

The transfer uses zfs send and zfs receive, tunnelled over SSH. The result is a relatively current copy of each guest's disks on the target node. The process is reasonably lightweight: all of the changes over the interval are bundled up and sent in one go. That is far less work than Ceph's approach of replicating every individual write as it happens.

This isn't quite shared storage in the traditional sense, but in many cases it is good enough. You could resume a home firewall or Home Assistant VM from a snapshot taken 5 minutes ago, and you would likely lose only some log files. If you were running a bank or a post office IT system, it probably wouldn't be good enough.
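The "5 minutes ago" figure can be made more precise. Suppose snapshots are taken every I minutes and each incremental sync takes s minutes to land on the target. A failure just before a sync completes loses everything since the previous snapshot, so the worst case is I + s. Averaged over a failure time that is uniformly random within a cycle, the loss works out to I/2 + s. Below is a small helper; the 5-minute schedule and 30-second sync are hypothetical numbers.

```python
def data_loss_window(interval_min: float, sync_min: float) -> tuple[float, float]:
    """Return (average, worst-case) minutes of writes lost on an unplanned failover.

    Assumes a snapshot every `interval_min` minutes, each sync taking `sync_min`
    minutes to reach the target, and a failure time uniformly random in a cycle.
    """
    if not 0 <= sync_min < interval_min:
        raise ValueError("each sync must finish before the next snapshot is due")
    average = interval_min / 2 + sync_min
    worst = interval_min + sync_min
    return average, worst


# Hypothetical schedule: snapshot every 5 minutes, 30-second incremental sync.
avg, worst = data_loss_window(interval_min=5, sync_min=0.5)
print(f"average loss ~{avg:.2f} min, worst case ~{worst:.2f} min")
```

Shortening the schedule shrinks both numbers. The trade-off is more frequent, smaller transfers.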

Proxmox Clustering

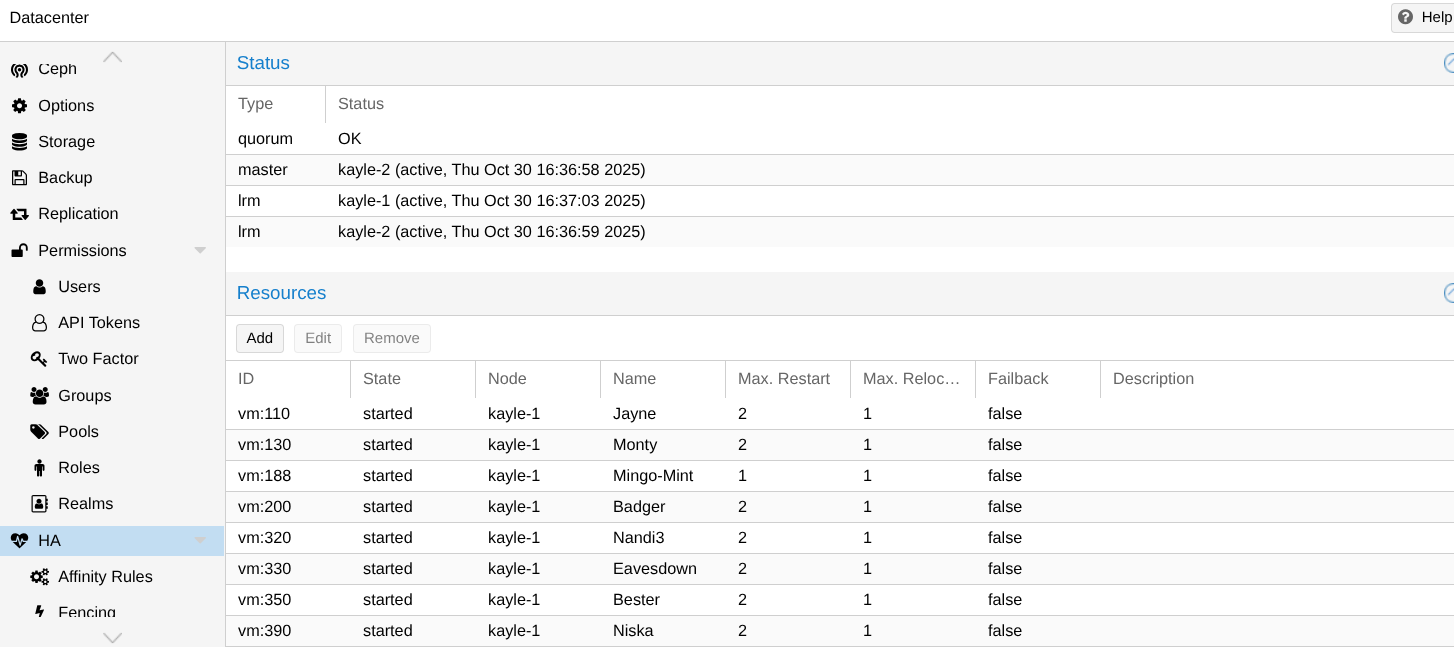

Proxmox clustering allows a number of Proxmox servers to be managed through a single interface. It provides quorum and high availability, and allows live migration of guests (assuming you have shared storage).

QDevice

TLDR; A QDevice lets a 2-node cluster work properly by adding a third voter (anything that runs Linux) to help with tie-breaks.

Proxmox clustering needs 3 voting members, so in theory we still need 3 servers. With only two, a server can't tell whether its peer is down or the network between them is down. Both servers running active instances of the same VM would be bad, so we need a way of establishing quorum. With a third vote, the surviving server can work out that its peer is dead.

But you don't need a full Proxmox server for that; you just need a Linux machine that is on all the time. A QDevice is exactly that: a daemon that talks to a 2-node Proxmox cluster and acts as the tie-breaker. It's available from apt (the corosync-qnetd package), and some people use a Raspberry Pi as a cheaper, lower-power option than a full server. I have a NAS running Debian, perfect! So now I'm down to 2 Proxmox nodes and 1 QDevice (running on my NAS). The second Proxmox node adds about 25-30W of idle power, but it buys a full extra level of redundancy.
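The tie-break comes down to vote counting. Corosync's votequorum requires a strict majority of the expected votes (expected // 2 + 1). Two nodes plus a QDevice therefore gives 3 expected votes and a quorum of 2. This sketch gives every member one vote, which is a simplification. In a clean 50/50 network split, the QDevice's ffsplit algorithm (used for even-sized clusters) grants its vote to only one side; the has_qdevice_vote flag models that.

```python
def quorum_threshold(expected_votes: int) -> int:
    """Votes a partition needs: a strict majority of all expected votes."""
    return expected_votes // 2 + 1


def is_quorate(visible_nodes: int, has_qdevice_vote: bool,
               total_nodes: int = 2, use_qdevice: bool = True) -> bool:
    """Can this partition keep running guests? (one vote per node and QDevice)"""
    expected = total_nodes + (1 if use_qdevice else 0)
    votes = visible_nodes + (1 if use_qdevice and has_qdevice_vote else 0)
    return votes >= quorum_threshold(expected)


scenarios = {
    "both nodes up": is_quorate(2, True),
    "peer dead, QDevice reachable": is_quorate(1, True),
    "peer dead, no QDevice configured": is_quorate(1, False, use_qdevice=False),
    "link split, side granted QDevice vote": is_quorate(1, True),
    "link split, other side": is_quorate(1, False),
    "peer down for patching, NAS rebooting": is_quorate(1, False),
}
for name, ok in scenarios.items():
    print(f"{name:40} quorate={ok}")
```

Only the rows where a node can still count two votes stay quorate. That is what makes two nodes workable, and it is why the QDevice has to stay up during maintenance.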

Handwavey setup guide

You'll want to set up Proxmox on 2 nodes, then configure clustering, then the QDevice. Once that's done, you want to get some guests onto the cluster. In my case, I shut down a non-essential guest (this web server, as it happens), backed it up on the old cluster and restored it on the new cluster. You should then be able to set up replication (the target is your standby node) and get the initial syncs done. This was pretty quick for me; 10GbE and good SSDs probably helped. After that, configure High Availability for the guest, and you should be in a good position.
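For reference, the steps above map roughly onto the following Proxmox CLI commands. By default the sketch only prints them. The cluster name, node names, IP addresses and VM ID are hypothetical, and the backup-and-restore step is left out. Both pvecm add and pvecm qdevice setup may prompt interactively (the latter needs root SSH access to the QDevice host), so running each command by hand is probably wiser than using dry_run=False.

```python
import shlex
import subprocess

# Hypothetical names and addresses: substitute your own.
CLUSTER = "homelab"
NODE1, NODE2 = "pve1", "pve2"
NODE1_IP = "192.168.1.11"
QDEVICE_HOST, QDEVICE_IP = "nas", "192.168.1.5"
VMID = 100

# (host to run on, command), in the order described above.
STEPS = [
    (NODE1, ["pvecm", "create", CLUSTER]),                  # create the cluster
    (NODE2, ["pvecm", "add", NODE1_IP]),                    # join the second node
    (QDEVICE_HOST, ["apt", "install", "-y", "corosync-qnetd"]),
    (NODE1, ["apt", "install", "-y", "corosync-qdevice"]),
    (NODE2, ["apt", "install", "-y", "corosync-qdevice"]),
    (NODE1, ["pvecm", "qdevice", "setup", QDEVICE_IP]),     # register the tie-breaker
    # Replicate guest VMID to the standby node every 5 minutes (job ID "<vmid>-0").
    (NODE1, ["pvesr", "create-local-job", f"{VMID}-0", NODE2, "--schedule", "*/5"]),
    (NODE1, ["ha-manager", "add", f"vm:{VMID}"]),           # put the guest under HA
]


def run_plan(dry_run: bool = True) -> list[str]:
    """Print each step; with dry_run=False, also run it over SSH and stop on failure."""
    lines = []
    for host, cmd in STEPS:
        line = f"[{host}] {shlex.join(cmd)}"
        lines.append(line)
        print(line)
        if not dry_run:
            subprocess.run(["ssh", f"root@{host}", shlex.join(cmd)], check=True)
    return lines


if __name__ == "__main__":
    run_plan()
```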

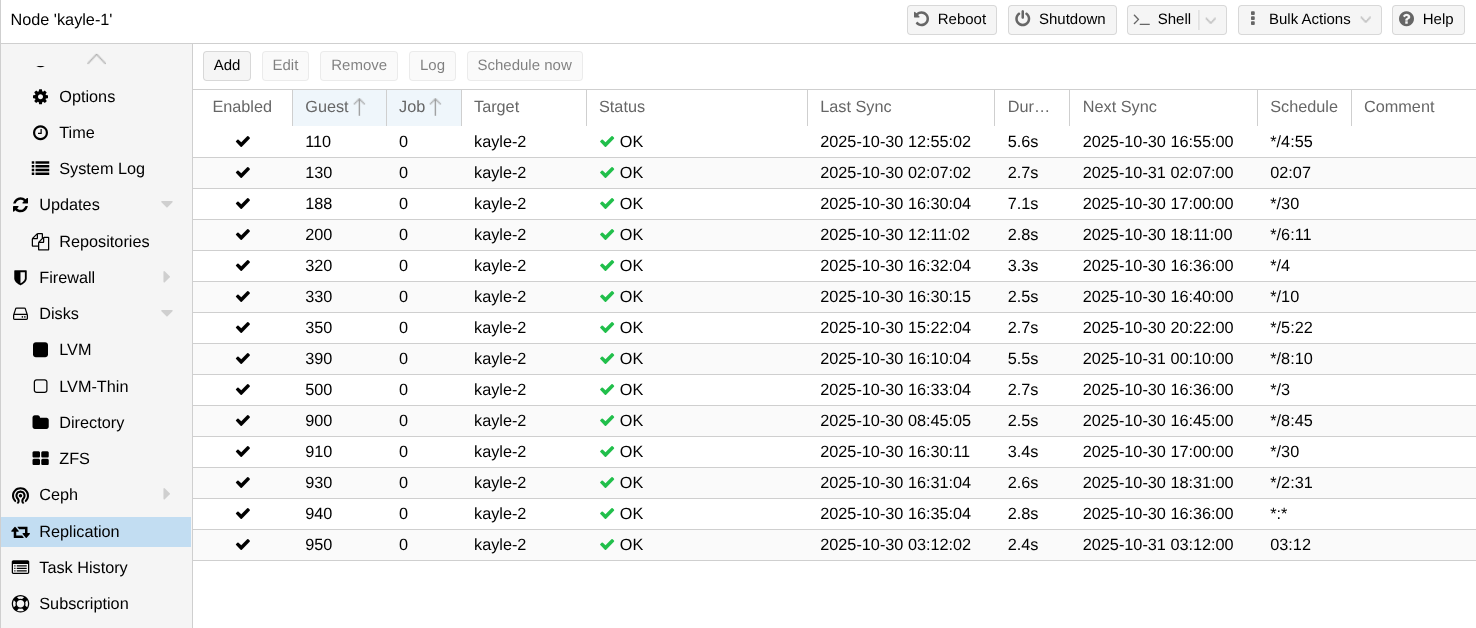

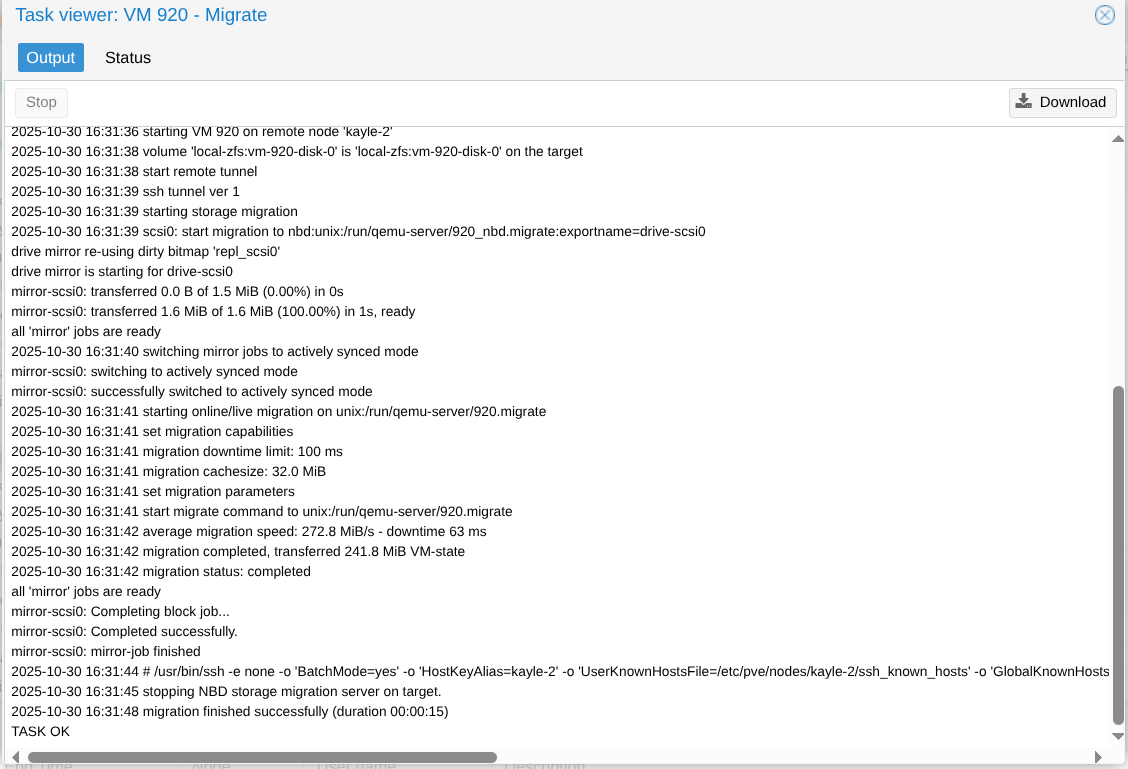

Live migration

Live migration is likely the most useful tool for me in month-to-month use. With ZFS replication, a nearly up-to-date disk image is already on the other host. Proxmox sends the remaining delta, copies the VM's memory, then stops the VM on one host, and 100-150ms later it pops into life on the other hypervisor. I can fail over the firewall without anybody really noticing, and my PPPoE session with the ISP stays up (the FTTP ONT is connected to a switch, and both Proxmox hosts have a NIC on that switch). Once you've moved the VMs off, you're free to shut down the hypervisor, replace hardware, patch it, etc. The only caveat is that the QDevice (and your remaining node) must stay up, or the cluster will lose quorum and stop all HA services. (You could also adjust the HA configuration for temporary maintenance.)
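QEMU's default live migration is "pre-copy". RAM is copied while the guest keeps running, and pages the guest dirties in the meantime are re-sent in further rounds. Only when the remainder is small enough is the VM paused for a final copy. The toy model below shows why that pause can be so short. The dirty rate, link speed and 0.1 s downtime target are all assumptions; Proxmox exposes a per-VM migrate_downtime option for the target. The model also ignores the ZFS delta, device state and network convergence, all of which add to the pause you actually observe.

```python
def precopy_migration(mem_mib: float, dirty_mib_s: float, link_mib_s: float,
                      max_downtime_s: float = 0.1, max_rounds: int = 30):
    """Toy pre-copy live migration.

    Returns (rounds, total MiB copied, final pause in seconds).
    """
    if dirty_mib_s >= link_mib_s:
        raise ValueError("guest dirties RAM faster than the link can copy it")
    if max_rounds < 1:
        raise ValueError("need at least one copy round")
    to_send = mem_mib      # round 1 copies all RAM while the guest keeps running
    copied = 0.0
    rounds = 0
    while rounds < max_rounds:
        rounds += 1
        round_time = to_send / link_mib_s
        copied += to_send
        to_send = dirty_mib_s * round_time   # pages dirtied during this round
        if to_send / link_mib_s <= max_downtime_s:
            break                            # small enough to stop and copy
    copied += to_send                        # final copy with the guest paused
    return rounds, copied, to_send / link_mib_s


# Assumptions: a 4 GiB guest dirtying 50 MiB/s over ~1100 MiB/s (roughly 10GbE).
rounds, copied, pause = precopy_migration(4096, 50, 1100)
print(f"{rounds} rounds, {copied:.0f} MiB sent, final pause {pause * 1000:.1f} ms")
```

With these made-up numbers, the model converges in two rounds and the final copy takes a few milliseconds. The 100-150ms observed above includes the overheads the model leaves out.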

Cold Migrate

Cold migration (of a shut-down VM or container) uses the same menu option; it just "officially" moves the image to the other cluster member after making sure replication is up to date.

Server failure

Once Proxmox is configured with replication, you can configure high availability. If one server detects that it can speak to the QDevice but not to the other server, it will start the VMs configured for high availability from their most recent replicated snapshot. You lose whatever changed between the last snapshot and now, but for most home use cases this likely isn't catastrophic. You could configure some services for HA (say, your firewall and remote access setup) and not others, and then decide manually for those whether you're happy to lose the last few minutes. In my case, pretty much every service is set up to resume automatically.
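Here is the failover rule from this paragraph, sketched as a function. The guest names, VM IDs and sync ages are hypothetical. The sketch is deliberately simplified. Real Proxmox HA also makes sure the lost node has been fenced (its watchdog has had time to reset it) before recovering its guests, which adds a delay before recovery starts.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Guest:
    vmid: int
    name: str
    ha_managed: bool          # e.g. added with: ha-manager add vm:<vmid>
    sync_age_min: float       # minutes since its last successful replication


def plan_recovery(guests: list[Guest], sees_peer: bool,
                  sees_qdevice: bool) -> tuple[list[Guest], list[Guest]]:
    """Return (guests to restart automatically, guests left for a manual decision).

    `guests` are the ones that were running on the peer. Simplified: real
    Proxmox HA also waits for the lost node to be fenced before recovering.
    """
    if sees_peer or not sees_qdevice:
        # Either the peer is fine, or this node lacks quorum and must not start anything.
        return [], []
    auto = [g for g in guests if g.ha_managed]
    manual = [g for g in guests if not g.ha_managed]
    return auto, manual


guests = [
    Guest(100, "firewall", True, 3.0),
    Guest(101, "vpn", True, 1.5),
    Guest(102, "photos", False, 4.0),
]
auto, manual = plan_recovery(guests, sees_peer=False, sees_qdevice=True)
for g in auto:
    print(f"start {g.name} from replica, ~{g.sync_age_min} min of writes lost")
for g in manual:
    print(f"leave {g.name} stopped for a manual decision")
```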

The Awkward buggers

Home Assistant

Home Assistant has a Z-Wave and Zigbee USB stick. I've not found a great way of moving these automatically (I've found some IP-controlled USB switches, but they're expensive). So I just shut down Home Assistant when I want to move it. If high availability moves the guest because of a server failure, I can't control Zigbee or Z-Wave devices until someone physically moves the USB stick.

Jellyfin

Jellyfin runs in a container, and because of the way I've configured it to use a CIFS mount from my NAS, it doesn't migrate cleanly. It's not super difficult to find a moment when nobody is using Jellyfin. The process is to shut it down, remove the mountpoint, migrate the container, re-add the mountpoint and start it back up again. It's a manual process: not great, not terrible.
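The Jellyfin shuffle could be scripted. This sketch assumes the NAS share is mounted at the same host path on both nodes and passed into the container as bind mount mp0. The container ID, target node and paths are hypothetical. pct commands act on the node that currently owns the container, so the last two steps run on the target over SSH.

```python
import shlex
import subprocess

# Hypothetical values: container ID, standby node and bind-mount spec
# (host path where the NAS share is mounted, then the path inside the container).
CTID = "105"
TARGET = "pve2"
MOUNT = "/mnt/nas-media,mp=/media"

STEPS = [
    ["pct", "shutdown", CTID],                # stop Jellyfin cleanly
    ["pct", "set", CTID, "--delete", "mp0"],  # drop the mount point that gets in the way
    ["pct", "migrate", CTID, TARGET],         # offline migration of the stopped container
    # pct acts on the node that owns the container, so the rest runs on TARGET.
    ["ssh", f"root@{TARGET}", "pct", "set", CTID, "--mp0", MOUNT],
    ["ssh", f"root@{TARGET}", "pct", "start", CTID],
]


def migrate_jellyfin(dry_run: bool = True) -> list[str]:
    """Print the steps; with dry_run=False, run them in order, stopping on the first failure."""
    lines = []
    for cmd in STEPS:
        lines.append(shlex.join(cmd))
        print(lines[-1])
        if not dry_run:
            subprocess.run(cmd, check=True)
    return lines


if __name__ == "__main__":
    migrate_jellyfin()
```

Proxmox also has a shared=1 flag for mount points, intended for host paths that already exist on every node. It may remove the need to delete and re-add mp0 at all, but that is untested against this setup.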

Hardware

Just a note on the hardware I chose for the new cluster. I went with an Intel Core Ultra 7 265K: 8 P-cores and 12 E-cores. The Proxmox community doesn't love heterogeneous (hybrid) architectures, but this chip costs the same as an 8-core AMD processor. You can easily pin some VMs to the P-cores and some to the E-cores. I have a reasonably balanced set of workloads: some will be fine on E-cores, some want P-cores. The Intel chip also has Quick Sync (QSV) hardware video transcoding, which is super useful for Jellyfin. I just thought I'd mention the excellent value for money of a 20-core, 5GHz processor with very reasonable power draw. The motherboards are also reasonably priced, though make sure you get one with 4 memory slots. (I went for an MSI PRO B860M-A WIFI; the Wi-Fi will not get used.)
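Pinning can be done with the per-VM affinity option (qm set <vmid> --affinity <cpu-list>, added in Proxmox VE 7.3). Recent kernels on hybrid Intel CPUs expose /sys/devices/cpu_core/cpus and /sys/devices/cpu_atom/cpus, which list the P-core and E-core CPU IDs. The sketch below reads those files if present and prints suggested commands. The VM IDs are hypothetical, and it's worth checking the output against lscpu before applying anything.

```python
from __future__ import annotations

from pathlib import Path


def parse_cpu_list(text: str) -> list[int]:
    """Expand Linux CPU-list syntax such as '0-7,16' into [0, 1, ..., 7, 16]."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def format_cpu_list(cpus: list[int]) -> str:
    """Compress CPU IDs back into list syntax: [0, 1, 2, 5] -> '0-2,5'."""
    ids = sorted(set(cpus))
    parts: list[str] = []
    i = 0
    while i < len(ids):
        j = i
        while j + 1 < len(ids) and ids[j + 1] == ids[j] + 1:
            j += 1  # extend the current run of consecutive IDs
        parts.append(str(ids[i]) if i == j else f"{ids[i]}-{ids[j]}")
        i = j + 1
    return ",".join(parts)


def hybrid_cpus(sysfs: Path = Path("/sys/devices")) -> dict[str, list[int]]:
    """Map 'p'/'e' to CPU IDs, using the hybrid PMU entries if the kernel exposes them."""
    found: dict[str, list[int]] = {}
    for kind, pmu in (("p", "cpu_core"), ("e", "cpu_atom")):
        path = sysfs / pmu / "cpus"
        if path.exists():
            found[kind] = parse_cpu_list(path.read_text())
    return found


if __name__ == "__main__":
    cores = hybrid_cpus()
    # Hypothetical VM IDs: 100 wants P-cores, 101 is happy on E-cores.
    for kind, vmid in (("p", 100), ("e", 101)):
        if kind in cores:
            print(f"qm set {vmid} --affinity {format_cpu_list(cores[kind])}")
```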

Conclusions

Open-source software is great; it's amazing that we have all of this capability available so cheaply and easily. The Proxmox team have produced something super usable and far beyond what any reasonable person could whip up themselves with KVM.

Two-node clusters are totally doable and bring a lot of benefits. They may even let you keep old hardware in service for longer by spreading work across multiple nodes (although power efficiency always needs to be weighed up).